How Did We Get Here?

When I joined the Culpa team at Columbia, I stepped into a project with an incredible history. For those who aren't familiar, Culpa is our student-run anonymous platform for professor and course reviews: think RateMyProfessors, tailored specifically to our university.

The original developers had built something truly valuable that had helped countless students navigate course selection over the years. But like any long-running software project passed between generations of student developers, time had taken its toll.

What started as a clean, well-structured application had gradually accumulated those classic signs of a well-loved but aging codebase. Each team of students (including us) had added features and fixes over the years, but without consistent maintenance practices, technical debt had quietly piled up.

We were spending around $100 a month across DigitalOcean and Heroku on infrastructure that struggled under peak traffic. Whenever the site slowed to a crawl during registration periods, complaints showed up on Sidechat, and we couldn't blame the students posting them; we felt the same frustration.

It wasn't that any particular team had done anything wrong. It was the natural evolution of a project handed down through generations of busy students, each just trying to keep things running while balancing classes, internships, and occasionally sleeping. But it was clear that Culpa deserved some dedicated love to carry it forward for the next generation of Columbia students.

System Design

The goal here was to design a system architecture that would be more robust, cheaper to deploy, and easier to maintain. Here's what we came up with:

Frontend: The user-facing application is built with React and lives in an S3 bucket, served through AWS CloudFront. A CDN like CloudFront works well here because it caches our static frontend files at edge locations close to users, so pages load very quickly.
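A common way to get the most out of a CDN for a React build is to let content-hashed bundles be cached for a long time while forcing `index.html` to be revalidated, so a new deployment shows up immediately. The sketch below is an assumption about how such a policy could look, not necessarily our exact setup: the hex-hash file naming (`main.3f9a2c1b.js`) and the `cache_control_for` helper are hypothetical. The returned string would be stored as each S3 object's `Cache-Control` metadata, which CloudFront can honor when deciding how long to cache a file.

```python
import re

# Assumes the build emits content-hashed bundles such as "main.3f9a2c1b.js",
# where the hex hash changes whenever the file's contents change.
HASHED_ASSET = re.compile(r"\.[0-9a-f]{8,}\.(?:js|css|png|svg|woff2?)$")

LONG_LIVED = "public, max-age=31536000, immutable"  # one year
REVALIDATE = "no-cache"  # may be cached, but must be checked with the origin before reuse


def cache_control_for(path: str) -> str:
    """Choose the Cache-Control metadata for a file uploaded to the frontend bucket."""
    if HASHED_ASSET.search(path):
        # A hashed file never changes under the same name, so edge caches
        # and browsers can keep it for a long time.
        return LONG_LIVED
    # index.html and other un-hashed files must be revalidated so users pick up
    # new deployments, which reference new hashed bundles.
    return REVALIDATE
```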

API Gateway: This was a game-changer for us. The API Gateway routes requests from CloudFront to our backend services while enabling three crucial features:

- Response caching (most reviews don't need real-time updates)

- Rate limiting to prevent our servers from melting down when someone tries to scrape the entire site

- Automatic metrics collection so we can actually see what's happening in our system

Backend: A Flask application deployed on EC2 handles all the business logic. We use Pydantic models for strict data validation, so messy or invalid inputs are rejected before they reach the rest of our code.
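As a minimal sketch of that validation layer, assuming Pydantic and a hypothetical `ReviewSubmission` model (the field names and length limits are illustrative, not Culpa's real schema), a handler can parse the incoming JSON and turn any failure into a list of offending fields:

```python
from pydantic import BaseModel, Field, ValidationError


class ReviewSubmission(BaseModel):
    """Hypothetical review payload; field names and limits are illustrative."""

    professor_id: int = Field(gt=0)
    course_id: int = Field(gt=0)
    content: str = Field(min_length=50, max_length=5000)


def parse_review(payload: dict):
    """Return (review, None) if the payload is valid, else (None, bad_field_names)."""
    try:
        return ReviewSubmission(**payload), None
    except ValidationError as exc:
        # Each error's "loc" names the offending field, which is enough for a 400 response.
        return None, [".".join(str(part) for part in err["loc"]) for err in exc.errors()]
```

Inside a Flask view, `payload` would come from `request.get_json()`, and a non-empty error list would become a 400 response instead of a half-valid database row.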

File storage: To store PDFs, we now use pre-signed S3 URLs. When a user wants to upload or download a syllabus, our backend generates a temporary URL that lets the browser talk directly to S3, keeping large files out of our application layer entirely.

Tracking users while maintaining anonymity: Since Culpa is meant to be anonymous, we implemented a cookie-based solution to track user votes without collecting personal data. Sure, a determined student could technically clear their cookies to vote multiple times, but we're betting that few people enjoy doing this.
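A minimal sketch of the idea, assuming a random per-browser ID stored in a cookie (the `voter_id` cookie name and the in-memory `VoteStore` are hypothetical stand-ins for a real cookie and database table). The ID identifies a browser, not a person, and keying votes by `(voter_id, review_id)` means a repeat vote replaces the earlier one instead of stacking on top of it.

```python
import uuid

COOKIE_NAME = "voter_id"  # hypothetical cookie name


def get_or_create_voter_id(cookies: dict) -> tuple[str, bool]:
    """Return the anonymous voter ID from the request cookies, minting one if absent.

    The second value tells the caller whether the cookie must be set on the response.
    """
    existing = cookies.get(COOKIE_NAME)
    if existing:
        return existing, False
    return uuid.uuid4().hex, True  # random, so it carries no personal data


class VoteStore:
    """In-memory stand-in for a votes table keyed by (voter_id, review_id)."""

    def __init__(self):
        self._votes = {}  # (voter_id, review_id) -> +1 or -1

    def cast(self, voter_id: str, review_id: int, value: int) -> None:
        # Re-voting overwrites the previous vote instead of adding another one.
        self._votes[(voter_id, review_id)] = value

    def score(self, review_id: int) -> int:
        return sum(v for (_, rid), v in self._votes.items() if rid == review_id)
```

In Flask, when the second return value is `True`, the view would attach the new ID with something like `response.set_cookie("voter_id", voter_id, httponly=True, secure=True, samesite="Lax")`.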

Protecting against DDoS attacks & rate limiting: One clever architectural decision was routing all traffic through CloudFront. This meant our frontend and API requests share the same origin, eliminating CORS headaches when working with cookies. It also meant we could set up IP-based rate limiting through CloudFront, which added another layer of robustness to our API.
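In our setup this check happens at the edge rather than in our own code (on AWS, IP-based limits for a CloudFront distribution are typically configured as an AWS WAF rate-based rule). To make the idea concrete, here is a minimal in-process sliding-window limiter keyed by client IP; the class name, limit, and window are illustrative assumptions, and the clock is injectable so the behavior can be tested.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `limit` requests per client IP in any `window`-second span."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self._hits = defaultdict(deque)  # ip -> timestamps of recently allowed requests

    def allow(self, ip: str) -> bool:
        now = self.clock()
        hits = self._hits[ip]
        # Drop timestamps that have slid out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # the caller would answer with HTTP 429 Too Many Requests
        hits.append(now)
        return True
```

Enforcing the limit at the edge has a practical advantage over this sketch: abusive traffic is rejected before it costs any EC2 time, and the count is not limited to the requests a single process happens to see.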

Lessons Learned: The Engineering Wisdom We Will Keep For The Future

Infrastructure as Code

In hindsight, I'm a bit embarrassed that I didn't see the point of Infrastructure as Code until recently. Creating resources by clicking through the AWS Console just seemed simpler...

Yet after trying it out on this project, the benefits are clear:

- We can recreate our entire environment with a single command

- Changes are tracked in version control, so we know exactly who broke what and when

- Creating staging environments is trivial rather than a week-long project

- Rollbacks are possible if something goes catastrophically wrong

We ended up with a system that costs around $25 per month to run (it would be even cheaper on the AWS Free Tier, but unfortunately we've already used up the 12-month free period for most services...) and that doesn't make me wake up in a cold sweat wondering if today is the day the whole thing implodes.

CI/CD Speeds Up Development Enormously

Prior to our redesign, deploying new features was pretty scary. It took about an hour of manual steps, each one an opportunity to mess up and feel terrible about it.

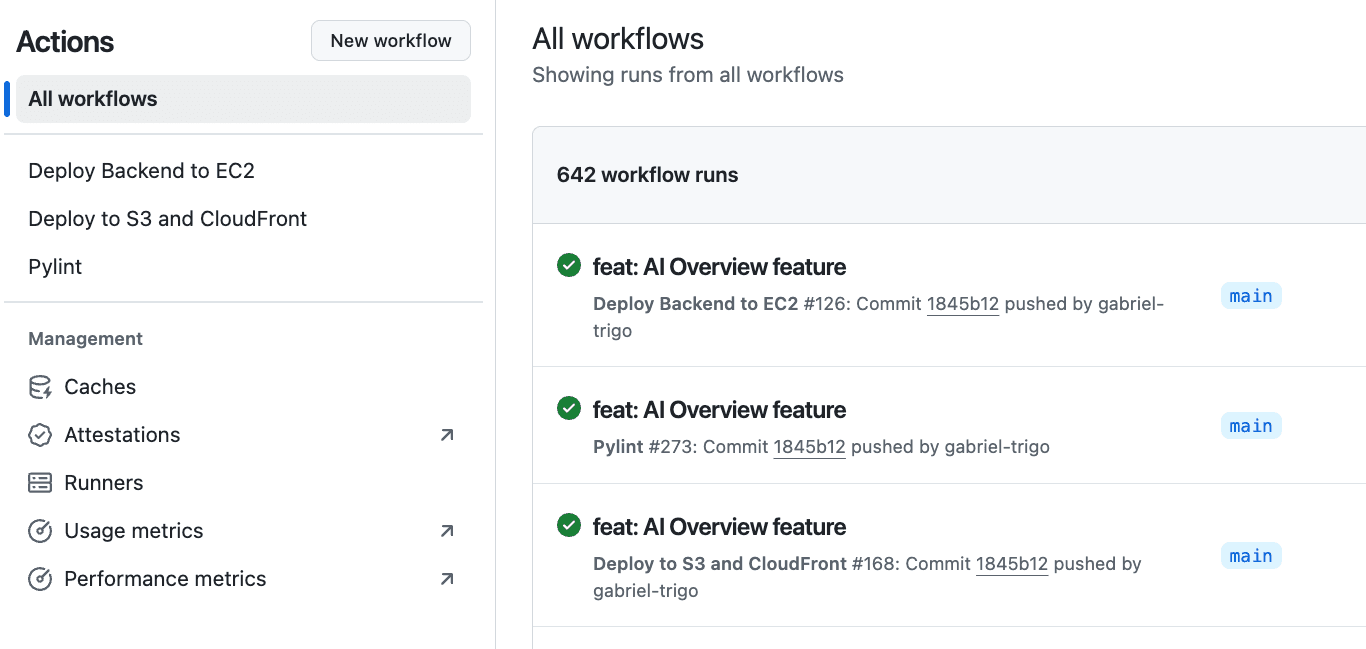

Implementing CI/CD workflows with GitHub Actions means that now our code is automatically tested, built, and deployed whenever changes get merged to the main branch. The feeling of seeing those green checkmarks appear after a successful deployment is better than any caffeine rush I've experienced during finals week.

Our deployment time went from about an hour to under 5 minutes, measured from the moment code is merged to the moment the changes are live on the website, with no manual steps in between. This has already saved us a lot of anxiety whenever we push changes.

But maybe the most important lesson here is that frequent deployments are far less scary than infrequent ones. I had the misconception that deploying often meant more chances to break things, but in reality, the opposite is true. The more often you deploy, the smaller each change is, and the easier it is to identify and fix issues. Frequent deployments also keep the deployment process itself exercised and up to date (unlike deploying once every six months, when I had no idea whether I was about to blow everything up).

Caching Improved our Backend Performance Dramatically

In previous semesters, Culpa struggled badly during peak traffic periods (course registration). Pages often took several seconds to load, and sometimes didn't load at all because the website had crashed under the demand.

To fix this, we added caching to our most frequently accessed routes. In hindsight, this was an obvious choice: professor and course reviews barely change minute to minute (especially since our content is moderated), so it makes little sense to fetch them from the database on every request. This made a huge difference: average backend latency dropped below 50 milliseconds, even under peak traffic, while our tail (99th percentile) response times typically stayed under 300 milliseconds.

Observability

Previously, we had no idea what was happening in our system, or how it was performing. During course registration, we only found out that the website was crashing when we started seeing angry student posts on Sidechat. And even when we realized that our system was crashing, we were still left wondering: were we facing a bottleneck on our database, backend, or something else...?

This time, we implemented observability across our entire infrastructure with CloudWatch. Now we have real-time metrics for every part of our system, which lets us pinpoint exactly which component is misbehaving.
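CloudWatch collects metrics from AWS services like API Gateway automatically, but application-level numbers have to be published by the app. One option (an assumption here, not necessarily what our backend does) is CloudWatch's Embedded Metric Format: the app writes a structured JSON log line and CloudWatch extracts the metric from it, provided the logs reach CloudWatch Logs through a path that supports EMF, such as the CloudWatch agent. The `Culpa/Backend` namespace, `Route` dimension, and helper name are hypothetical.

```python
import json
import time


def emf_latency_record(route: str, latency_ms: float, namespace: str = "Culpa/Backend") -> str:
    """Build one Embedded Metric Format log line reporting a request's latency."""
    return json.dumps({
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [["Route"]],  # one dimension set: split the metric by route
                "Metrics": [{"Name": "Latency", "Unit": "Milliseconds"}],
            }],
        },
        "Route": route,  # value for the "Route" dimension
        "Latency": latency_ms,  # value for the "Latency" metric
    })
```

Logging one such line per request would let CloudWatch chart average and p99 latency per route, which is exactly the kind of per-component view that tells a database bottleneck apart from a backend one.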

During this latest registration period, our metrics showed that the system was performing well even at peak load, which reassured us and kept us from scaling up unnecessarily.

The Results: A Better Culpa For All

The redesigned Culpa is faster, more reliable, significantly cheaper to operate, and easier to maintain on our end. During the most recent course registration period, the site hummed along smoothly instead of crashing spectacularly.

Deployments transitioned from stressful manual processes to automated workflows that require minimal intervention. Data quality improved dramatically thanks to strict Pydantic validation of every input.

Most importantly, we've built something that future Columbia students can inherit without immediately wanting to throw their laptops out the window, breaking the cycle of technical debt that had plagued the project for years.